
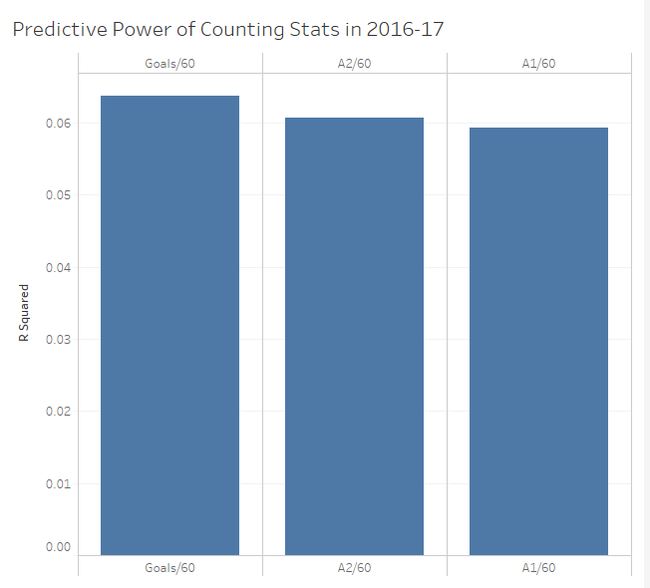

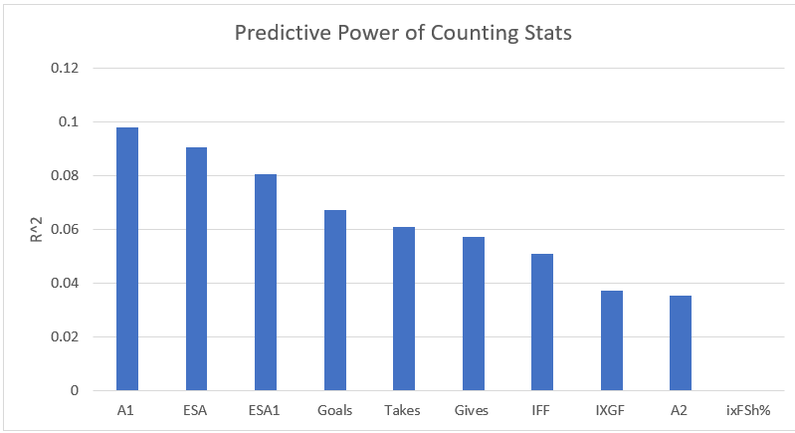

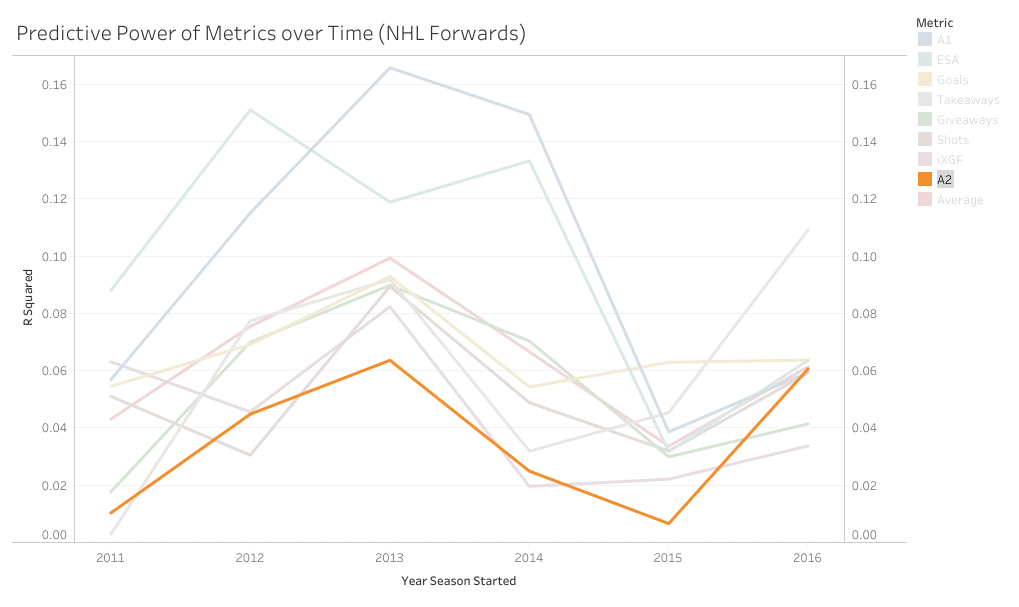

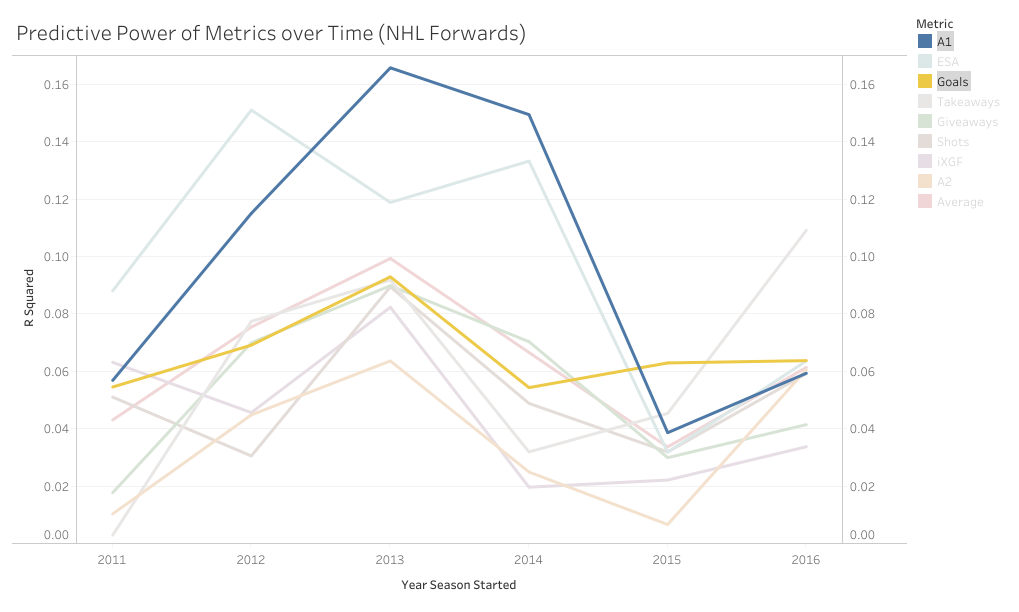

So picture this. You're hanging out with some friends and a debate starts: "What's more important, goals or primary assists?" There are many ways to go about answering this question, but many in hockey analytics will look at how predictive each metric is, that is, how highly correlated each one is with future on-ice goals for. So you pull up Corsica to answer that question. First, you grab the counting stats of all the forwards who played at least 500 5v5 minutes in 2016-17. Then you grab the adjusted on-ice relative-to-teammate goals for per hour of all the forwards who played at least 500 5v5 minutes in 2017-18. Next, you delete all the players who didn't play 500 minutes in both seasons, which leaves you with a decent sample of over 250 forwards. Finally, you line the two up against each other, check how well each 2016-17 metric correlates with the 2017-18 results, and use that to argue you know what the best counting stat is.

However, if you do this, the results will surprise you. If all I gave you was goals and primary assists, this could look pretty reasonable: goals slightly outperformed primary assists in 16-17. The addition of secondary assists is where it gets weird. Secondary assists were actually slightly more predictive than primary assists. The more familiar you are with hockey statistics, the more surprising this probably is. Eric Tulsky showed that secondary assists aren't nearly as repeatable as primary assists back when I was in middle school, so secondary assists being inferior to primary assists hasn't been a controversial take for a while now.

So what happened? Well, the obvious answer is noise. Everybody understands that hockey statistics are noisy, but I'm not sure everyone understands just how noisy they can be. Even with a 250+ player sample, the signal is overwhelmed by the noise. This becomes obvious when you extend the sample to every back-to-back season since 2011.
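
For anyone who wants to try the single-season check described above, here is a minimal sketch of it in plain Python. It is not the exact workflow used with Corsica: the field names (`toi`, `goals`, `a1`, `a2`, `rel_tm_gf60`) and the toy numbers are made up purely to show the shape of the calculation, and I'm assuming each season is stored as a dictionary keyed by player.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

def predictive_correlations(prev, nxt, metrics, target, min_toi=500):
    """Correlate each year-one metric with the year-two target.

    prev and nxt map player -> dict of stats for one season.
    Only players with at least min_toi minutes in BOTH seasons are kept.
    """
    players = sorted(
        p for p in prev.keys() & nxt.keys()
        if prev[p]["toi"] >= min_toi and nxt[p]["toi"] >= min_toi
    )
    future = [nxt[p][target] for p in players]
    return {m: pearson([prev[p][m] for p in players], future) for m in metrics}

# Toy, made-up numbers (NOT real player data), just to show the input shape.
season_1 = {
    "A": {"toi": 900, "goals": 20, "a1": 15, "a2": 8},
    "B": {"toi": 700, "goals": 10, "a1": 12, "a2": 10},
    "C": {"toi": 650, "goals": 5, "a1": 6, "a2": 3},
    "D": {"toi": 300, "goals": 2, "a1": 1, "a2": 1},  # dropped: under 500 minutes
}
season_2 = {
    "A": {"toi": 950, "rel_tm_gf60": 0.40},
    "B": {"toi": 800, "rel_tm_gf60": 0.10},
    "C": {"toi": 600, "rel_tm_gf60": -0.20},
    "D": {"toi": 800, "rel_tm_gf60": 0.00},
}

result = predictive_correlations(
    season_1, season_2, ["goals", "a1", "a2"], "rel_tm_gf60"
)
```

With a real export you would swap the toy dictionaries for one row per forward per season. The important part is the filter: a player only counts if he clears the minutes cutoff in both years.
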

(If you're unfamiliar with some of the stats below, I talk about them in my BPM explainer.) With the extended sample, the results make a lot more sense. Primary assists are the most predictive, then goals, and finally there's a massive drop-off to secondary assists. So even though you can technically use evidence to show that secondary assists are more predictive than primary assists, you would be wrong. And the problem is, this happens way more than you might think.

With help from Bill Comeau, there's now an interactive Tableau showing how predictive each metric has been over the years, so you can see for yourself how much noise there is in the predictive value of hockey statistics. It looks like a mess altogether, but if you highlight secondary assists you can see that even though they are the "worst" metric in aggregate, they have only been the worst metric 2 out of 6 times. I chose secondary assists because they are generally viewed as useless in the analytics community, but as you can see, this happens with other metrics too. Look at goals and primary assists for another example. This one works well because goals have outperformed primary assists in two straight seasons with hundreds of players in the sample, at which point people will likely become very confident in the correlations. Thankfully, with these counting stats we can easily cite a larger sample of data and show people why they are still probably better off weighting primary assists more heavily than goals. Unfortunately, we are not always going to have that luxury.
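
To get a rough feel for how much a single-season correlation can wobble, one standard tool is the Fisher z-transform, which gives an approximate confidence interval for a Pearson correlation. This is a sketch rather than anything from the analysis above: the observed correlation of 0.20 is a hypothetical value chosen for illustration, and the method assumes roughly bivariate-normal data with independent players, which hockey data only loosely satisfies.

```python
from math import atanh, sqrt, tanh

def correlation_interval(r, n, z_crit=1.96):
    """Approximate 95% interval for a Pearson r measured on n players.

    Uses the Fisher z-transform, whose standard error is 1 / sqrt(n - 3).
    """
    z = atanh(r)
    se = 1 / sqrt(n - 3)
    return tanh(z - z_crit * se), tanh(z + z_crit * se)

# Hypothetical example: an observed correlation of 0.20 on 250 forwards.
low, high = correlation_interval(0.20, 250)
print(f"r = 0.20, n = 250 -> roughly {low:.2f} to {high:.2f}")
```

Under those assumptions the interval runs from about 0.08 to about 0.32. So if two metrics post single-season correlations only a few hundredths apart, a sample of 250 players can't really tell you which one is better.
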

I'm referring to what feels like the new wave in hockey analytics: micro-statistics. (I'm talking about hand-tracked data, but the general message will apply to the first few seasons of the NHL's tracking data too.) More and more often, it feels like we are seeing people hand-track data to learn about the game. From macro-scale projects like Corey Sznajder and company's All Three Zones Project to more micro-scale projects like Harman Dayal tracking the Vancouver Canucks, this sort of data seems to be where the analytics community is trending. Of course, the more projects like these the better. It's awesome that we now have the ability to dig deeper into transition data to show how well Erik Karlsson exits the zone, or to quantify some players' ability to apply pressure on the forecheck. This analysis might just be the future of hockey analytics, and hey, the best way to learn is to try.

But as more and more of this data is collected, we will inevitably zero in on how predictive each of the metrics proves to be. This has started already thanks to CJ Turtoro's RITSAC presentation, where he used the All Three Zones Project's data on the Blue Jackets, Stars and Flyers to show that the public micro-stats were more predictive of future goals than traditional metrics like Corsi and xG (for defenders). It's great to see a signal like this, but it's important to urge caution even if we continue to see promising results like these in the future, because even full seasons' worth of data on the entire league can lead you astray, just like secondary assists would have in 2016-17. Sadly, tracking games takes hours from extremely committed people, so we likely won't have the luxury of league-wide data sets for a while, which makes an already difficult task even more challenging.

So yes, all this new data is exciting, and it's awesome that the early results are promising. But it's going to take much longer than most people would like before we can be confident about which metrics are most predictive of future results, and until then it's good to be cautious with testing results.


Author: Chace - Shooters Shoot

Archives: November 2021
